Host Your Stuff - Part VII

Lasers, linecards and Linuxes - Making all your computers talk. Usually.

Essentials of Networking for Self-Hosting

A capable, stable internal network will be crucial for a reliable self-hosting experience and will form the backbone of your home server environment. Upgrading from the all-in-one ISP provided box will be a good first step and the more advanced capabilities of professional or enterprise networking equipment can be the difference between a fifteen minute job and days of troubleshooting.

The big upgrades that should be made are:

- A new firewall/router

- A managed switch

- The right cabling and supporting equipment

These will allow you to build out your network to serve whatever needs you have and swap out components as needed without replacing the entire network at once. This will typically be more expensive than your standard TP-Link all-in-one from a big box store, but may not be as expensive as expected. We'll dive into a few different vendors and their advantages or disadvantages, what considerations should be kept in mind and what on earth an L3 Switch is.

So what is a network anyway?

A network consists of the devices and connections between different computers and the standardized ways they communicate. The network inside your home now likely takes the form of wired Ethernet and wireless WiFi which allows your various devices to communicate with each other and the outside world. The communications can be broken into seven layers, formally known as the Open Systems Interconnection (OSI) Model. We'll only deal with layers 1-3 and touch on 7 today.

Layer 1 - Physical Layer

The physical layer consists of the actual cables, connectors and wireless signals that each computer uses to connect to the network. Today this most commonly takes the form of twisted pair copper cable, 802.11 WiFi and fiber optic cables. The physical connections will determine what sort of devices the computer can connect to directly, what speeds it can talk at and inform the physical layout of the network.

Twisted Pair Cabling

Twisted Pair cables, generally called Category 5 (Cat5) or Category 6 (Cat6) cables (which refers to a distinct specification for these cables), are likely the most familiar physical layer wired connection most people interact with. The name "Twisted Pair" comes from their internal design which consists of four pairs of wires with each pair twisted together. This is intended to reduce cross-talk and interference and is a critical part of the design for these cables.

These cables are fairly durable and can easily be bent around obstacles making it a good choice for running in more confined spaces or where tight bends are involved.

These cables range from 100 Megabit through 10 Gigabit depending on the exact rating and revision. All of these cables are rated for their speeds at 100m, unless specified otherwise.

- Category 5: Cat5 cabling is rated for 100Mb, is typically found with much older equipment and isn't terribly useful here.

- Category 5e: A revision to Cat5 and typically the most common CatX cable seen. These cables support up to 2.5Gb, but are most commonly used for 1Gb. These are inexpensive, ubiquitous and will likely serve as the mainstay of a home network.

- Category 6: These cables are also rated for 1Gb over their full distance, but can support 10Gb at up to 30-55m.

- Category 6A: Cat6A improves on Cat6 with thicker shielding and can support 10Gb over the full 100m. Due to the shielding, these cables are thicker, more rigid and more expensive. These are best suited to industrial environments or other uses where interference is a bigger factor.

- Category 7 and 8: Snake oil peddled by vendors looking to upcharge Cat6A. They claim to support 25, 40 and 100Gb over some distance, however there are no transceivers designed to use these cables at that speed. Feel free to buy it if you enjoy the smell of burning cash, otherwise skip right to DACs and fiber optic cabling.

These cables are terminated into connectors called "RJ-45" which is the cable-end of the familiar "Ethernet port" on many computers. These cables can easily be made at home with an RJ-45 crimper, some tips and a length of cable. This will take a bit of practice, but can save you some time and money when making custom cable runs, particularly for very short or very long cables. Depending on the amount of cables you need to run, patience for crimping cables and budget, a 500 or 1000ft reel of Cat5e can be handy to have around. Personally, I've gone through about two of those 1000ft reels in six years and have cabled and re-cabled a dozen or so full racks and part of two homes with them. This can also allow you to re-use old cables that may not be the right length and is a useful tool to have on hand for networking.

The specific wiring standard is called TIA/EIA 568A or TIA/EIA 568B. Which standard to use is somewhat region dependent, with TIA/EIA 568B being common in North America; other regions may have their own preferences. Check the other cables around you and use that standard, just be consistent. Or don't, I'm not going to come into your home and rewire everything. Preferences for TIA/EIA wiring standards are an excellent way to ignite a flamewar similar to asking what everyone's favorite text editor is. I trust that you, dear reader, will use this knowledge only for good.
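For reference, the two standards only differ in which pair (orange or green) lands on pins 1, 2, 3 and 6. Here's a minimal Python sketch of that. The pin colors come from the published T568A and T568B pinouts rather than anything earlier in this article, and `classify_cable` is a made-up helper name for illustration.

```python
# Wire colors for pins 1 through 8 under each termination standard.
T568A = ["white/green", "green", "white/orange", "blue",
         "white/blue", "orange", "white/brown", "brown"]
T568B = ["white/orange", "orange", "white/green", "blue",
         "white/blue", "green", "white/brown", "brown"]


def classify_cable(end_one, end_two):
    """Classify a cable from the pin order seen at each end."""
    standards = (T568A, T568B)
    if end_one not in standards or end_two not in standards:
        return "miswired"
    if end_one == end_two:
        return "straight-through"
    return "crossover"


def differing_pins():
    """Pins (1-based) whose wire color differs between the two standards."""
    return [pin for pin, (a, b) in enumerate(zip(T568A, T568B), start=1) if a != b]


if __name__ == "__main__":
    print(classify_cable(T568B, T568B))  # same standard on both ends
    print(classify_cable(T568A, T568B))  # one end of each
    print(differing_pins())
```

Using the same standard on both ends gives a normal straight-through cable, which is why "just be consistent" is the part that actually matters.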

Fiber Optic Cables

Fiber optic cabling is fundamentally different from copper cabling, using light instead of electrical signals to transmit data over a cable. This allows for a much further reach for these signals and dizzying speeds at the top end. These will often be the go-to solution for cabling going over 2.5Gb and can scale all the way to terabits over a pair of fibers.

The two main modes of operation are Single Mode Fiber (SMF) and Multi-Mode Fiber (MMF), which use different cables and optics and behave subtly differently in practice. Typically, MMF is used for shorter (300m and less) runs at 100Gb or less. These fibers have a wider glass core and use cheaper LED or VCSEL optics, making MMF cheaper overall, slightly more durable and typically suitable for home use. Conversely, SMF uses a much narrower glass core and more precise, higher power lasers in the optics. This does result in more expensive and fragile cables but allows for very long distance transmissions. Single Mode Fiber also allows for substantially higher maximum data rates (800Gb being the fastest commodity NIC available at time of writing) over a single fiber, as well as high density fiber optic networking like multiplexing, Passive Optical Networks and other more exotic systems.

Multi-Mode Fiber Speeds and Feeds:

OM1 and OM2: Legacy standards for older networks, can provide up to 10Gb at 33 and 82m respectively, but have no practical use as OM3 is cheap, plentiful and a comprehensive upgrade. Identifiable through an orange plastic jacket.

OM3 and OM4: The mainstay of 10Gb and 40Gb networks, can provide 10Gb at 300 and 550m respectively as well as supporting 40 and 100Gb at 100 and 150m respectively. Identifiable through an aqua plastic jacket. These cables are currently some of the most common. OM4 is recommended over OM3 given they have a small price difference and tend to more consistently pass 25Gb and 100Gb at over 100m. OM4 will sometimes have a pink plastic jacket.

OM5: The current top end of the multi-mode spec, supporting 40Gb at 440m and 100Gb at 150m. These are going to be rare sights in most environments with a substantial price premium over OM4 and a questionable benefit versus single-mode fiber. These are commonly identified by a green plastic jacket. OM5 also supports SWDM (Shortwave Wavelength Division Multiplexing), a multiplexing technology allowing multiple wavelengths to transmit over a single fiber, increasing the aggregate throughput of a single physical cable.
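If you'd rather look this up than memorize it, the figures above fit in a small table. This is only a sketch: the numbers are copied from the list above, `grades_for` is a hypothetical helper name, and a missing entry just means that speed wasn't listed here, not that the fiber can't do it.

```python
# Maximum reach in metres per fiber grade and speed (in Gb), as listed above.
MMF_REACH_M = {
    "OM1": {10: 33},
    "OM2": {10: 82},
    "OM3": {10: 300, 40: 100, 100: 100},
    "OM4": {10: 550, 40: 150, 100: 150},
    "OM5": {40: 440, 100: 150},
}


def grades_for(speed_gb, distance_m):
    """Return the fiber grades whose listed reach covers this run."""
    return [
        grade
        for grade, reach in MMF_REACH_M.items()
        if reach.get(speed_gb, 0) >= distance_m
    ]


if __name__ == "__main__":
    print(grades_for(10, 50))    # a 50m run at 10Gb
    print(grades_for(100, 120))  # a 120m run at 100Gb
```

An empty list is the hint that the run is better served by single-mode fiber.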

Fiber Optic Transceivers

A fiber optic cable on its own does not provide much use and needs something to send and receive light over it. This is where transceivers come into play, acting as the interface between the network card and the physical cabling. These transceivers come in three main packages: SFP (Small Form-factor Pluggable), QSFP (Quad SFP) and OSFP (Octal SFP), which have different physical sizes as well as maximum data rates. Each group of transceivers will typically have a suffix attached which indicates the maximum speed of the physical interface (PHY).

SFP Suffixes:

- (None) - 1Gb per channel, meaning an SFP can do 1Gb, QSFP can do 4Gb. These are generally legacy transceivers or used for special purposes.

- (+) - 10Gb per channel, SFP+ provides 10Gb, QSFP+ can do 40Gb

- 28 - 25Gb per channel with SFP28 (25Gb) and QSFP28 (100Gb)

- 56 - 50Gb per channel. Generally only seen as QSFP56, QSFP56-DD and OSFP56. QSFP56 provides 200Gb, the -DD suffix indicates "Double Density" instead using eight channels and providing 400Gb. This is distinct from OSFP56 which also uses 8 channels and provides 400Gb, but uses a separate form factor.
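The naming boils down to channels multiplied by per-channel speed. A rough Python sketch of that arithmetic, using only the form factors and suffixes listed above (`transceiver_speed_gb` is a hypothetical helper, and real part names come in more variants than this handles):

```python
# Channels per form factor.
LANES = {"SFP": 1, "QSFP": 4, "OSFP": 8}
# Per-channel rate in Gb for each suffix; "" means no suffix at all.
LANE_RATE_GB = {"": 1, "+": 10, "28": 25, "56": 50}


def transceiver_speed_gb(name):
    """Return the nominal speed in Gb for a name such as 'QSFP28' or 'QSFP56-DD'."""
    double_density = name.endswith("-DD")
    base = name[:-3] if double_density else name
    for form_factor, lanes in LANES.items():
        if base.startswith(form_factor):
            suffix = base[len(form_factor):]
            if suffix not in LANE_RATE_GB:
                break
            # Double density doubles the channel count of the base form factor.
            if double_density:
                lanes *= 2
            return lanes * LANE_RATE_GB[suffix]
    raise ValueError(f"unknown transceiver: {name}")


if __name__ == "__main__":
    for name in ("SFP+", "SFP28", "QSFP28", "QSFP56-DD"):
        print(name, transceiver_speed_gb(name))
```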

The most common that will be seen in a lab will typically be SFP+, SFP28 and QSFP28. QSFP+ used to be common, but is something of a "dead end" technology and is rapidly being replaced by the more broadly compatible QSFP28.

Backwards Compatibility

In most cases, older standards for transceivers can be used in newer cages (the receptacle for the SFP) so an SFP+ transceiver will fit and typically work in an SFP28 cage, however an SFP28 transceiver will generally not work in an SFP+ cage. This is also true for the larger QSFP transceivers.

There is a substantial caveat to this: Switches and routers will often have their ports combined into groups of four on the hardware level, typically abstracted away from the end user. The observant reader may note that this aligns with the QSFP variants of a port. Does this mean all switches are really QSFP ports broken out to the physical ports? Sometimes that's exactly what's happening on some switches. Practically speaking, the primary difference is that if ports need to be changed to a lower speed (For example: changing 25Gb ports to work at 10Gb) it will need to be done in groups of four in line with the underlying port configuration. This typically comes in to play on larger, higher capacity switches.
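To make the "groups of four" point concrete, here's a small sketch that works out which ports would change speed together. It assumes ports are numbered from 1 and grouped consecutively (1-4, 5-8 and so on), which is an assumption on my part; check your switch's documentation for the real mapping. `port_group` is a hypothetical helper name.

```python
def port_group(port, group_size=4):
    """Return every port sharing a speed group with `port` (1-based numbering)."""
    if port < 1:
        raise ValueError("ports are numbered from 1")
    first = ((port - 1) // group_size) * group_size + 1
    return list(range(first, first + group_size))


if __name__ == "__main__":
    # Dropping port 6 to a lower speed would drag these ports along with it.
    print(port_group(6))
```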

Direct-Attach Copper Cable

Direct-Attach Copper Cables, or DACs, are entirely passive cables for SFP cages that provide a low power, low latency, short distance connection between two devices. Instead of taking the signaling from the SFP cage and translating it over to Cat(something) cable or fiber optic signaling, these cables directly transfer the signal between the two devices. This gives a small but real decrease in latency and consumes less power than other cabling. The main cost with these cables is that they are all-in-one units with the SFP interface and cable being one solid piece. This makes repairing the cable functionally impossible, whereas other interfaces let you replace just one transceiver or just the cable. The maximum distance for these cables is also much shorter, with 10m generally being the longest available. The biggest advantage is that these cables are often cheaper than short distance runs of fiber while consuming about half the power of fiber and 5-30x less power than RJ45 cabling.

A somewhat related cable option is the Active Optical Cable which functions the same as a DAC, but uses fiber optics between the transceivers. These are typically found for longer distance, specialty connections where traditional Ethernet doesn't work. Personally I've usually seen these for High Performance Computing interconnects like Mellanox (now Nvidia) Infiniband and Intel OmniPath as well as PCIe fan-outs for IBM POWER8 servers. These are fairly uncommon, but are worth mentioning as they typically signify something quite specific and potentially interesting is happening.

So What Do I Pick?

This choice primarily comes down to the specific purpose and data-rate involved. However, some general advice:

- 1GbE and 2.5GbE: Cat5e

- Networking <10m: DACs

  - These cables are fairly cheap and the distances inside a rack are short enough to be well within the maximum distances. The lower power draw can be good to keep switch power use lower (and therefore heat). Cat5e will be entirely sufficient for out-of-band management and is cheap.

- Runs >10m: Multi-mode Fiber

  - These longer runs will benefit from the forward compatibility for cabling and are generally going to be difficult enough to replace that a one-and-done run is likely best.

- Structured Cabling: Single-mode Fiber

  - Any particularly difficult to run cabling (and therefore rarely replaced) should be done with cabling that will support both current and long-term future upgrades. Single-mode fiber allows for a wide variety of throughputs and modern SMF will be entirely sufficient.
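The advice above can be written down as a tiny decision function. This is a sketch of my rules of thumb, not a standard. The order the rules are checked in and the 100m cap on Cat5e (from the rated distance mentioned earlier) are assumptions made for the example, and `pick_cabling` is a hypothetical name.

```python
def pick_cabling(speed_gb, distance_m, structured=False):
    """Apply the rules of thumb from the list above.

    `structured` marks hard-to-replace runs, such as in-wall cabling.
    """
    if structured:
        return "single-mode fiber"
    if speed_gb <= 2.5 and distance_m <= 100:
        return "Cat5e"
    if distance_m < 10:
        return "DAC"
    return "multi-mode fiber"


if __name__ == "__main__":
    print(pick_cabling(1, 30))    # desktop on gigabit
    print(pick_cabling(10, 2))    # server to switch inside a rack
    print(pick_cabling(25, 40))   # rack to rack across a room
    print(pick_cabling(10, 40, structured=True))  # in-wall run
```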

Layer 2: Data-Link Layer

This layer deals with frames being switched within a single local network. The primary purpose of this part of the network will be to expand out the "reach" of the next layer to more devices. The equipment used at this layer primarily involves switches, but has some relevance to wireless access points and some related technology. Solid, dependable switches will be the backbone of a good network and some upfront investment can pay dividends down the road. We'll go through what a switch is and how it works, some example use cases, hardware recommendations and compare a few key models ranging from a low power, unmanaged switch up through fire-breathing, multi-terabit Aristas to illustrate particular points.

The central idea of Layer 2 of the OSI model, and its relevance here, is to allow devices to communicate within a local network and to let you define what that network looks and acts like. In a typical home network, the combo modem/router/access point/switch makes up the entire infrastructure for the network, however we're going to be breaking that out into specific hardware, starting with a switch.

Fundamentals of a network switch

A good switch will generally be used to add more ports and host-to-host capacity for a network, will have as many or more ports than a router and be able to pass that traffic around at the full speed of each port without issue. A switch will have, at minimum, four main components:

- Physical Ports: A switch is no good without the ability to plug something into it, and the ports (and what speed they are) are generally the big differentiating factor within a single product line. The key things to keep in mind will be ensuring that you're getting at minimum enough ports, have the higher capacity ports you need to add more switches later and use compatible interfaces for your devices.

- A Switching ASIC: The ASIC or Application Specific Integrated Circuit is the heart of a switch's capabilities and is a core requirement for a usable switch. These ASICs are limited function, but very high-speed processors tailored for a small set of purposes. The capabilities of an ASIC will heavily determine what features the switch has and how fast it can fulfill them. These ASIC capabilities are a large part of what makes the difference between a $400 Unifi 48 port gigabit switch and a $10,000 Arista 48 port gigabit switch. The key features to look for will be the total non-blocking switching capacity, support for features you need or may want and port-to-port latency. We'll get into what these specs and features mean shortly.

- Control Plane: This component serves as the "brains" of a switch and is what manages the ASIC as well as handling the tasks an ASIC may not handle. This control plane is typically a normal x86_64 or ARM processor that connects to the ASIC and management ports. While this control plane is capable of handling some packet processing it will be slower and lower capacity than pushing traffic through the ASIC. This primarily comes in to play with more advanced switches capable of doing routing.

- Network Operating System: Since switches are, in effect, highly specialized computers, they also run an OS. While one won't find Windows or MacOS on a switch, highly custom versions of Linux, the BSDs or something bespoke are common. This will generally be proprietary and specific to the vendor and sometimes to specific product lines, but they do generally fall within a few big categories that we'll go over later.
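As a quick illustration of "total non-blocking switching capacity": a common convention is to add up every port's speed and double it, since each port can send and receive at full speed at the same time. The sketch below assumes that convention (vendors don't all count the same way), and the function names and example capacity figures are made up for illustration.

```python
def required_switching_capacity_gb(ports):
    """Capacity needed for every port to send and receive at full speed at once.

    `ports` maps a port speed in Gb to the number of ports at that speed.
    """
    one_way = sum(speed * count for speed, count in ports.items())
    return 2 * one_way  # full duplex: count both directions


def is_non_blocking(ports, asic_capacity_gb):
    """True when the stated ASIC capacity covers all ports at line rate."""
    return asic_capacity_gb >= required_switching_capacity_gb(ports)


if __name__ == "__main__":
    layout = {1: 24, 10: 2}  # 24x 1Gb ports plus 2x 10Gb ports
    print(required_switching_capacity_gb(layout))
    print(is_non_blocking(layout, asic_capacity_gb=88))
```

If the spec sheet's switching capacity is below that figure, the switch can't run every port flat out at once, which may or may not matter for your traffic.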

Managed vs Unmanaged Switches

One of the key differentiating features between basic small office, home office switches and their pro-sumer, enterprise and carrier grade cousins is the management of the switch. A basic, 5-port Netgear gigabit switch is perfectly capable of handling gigabit traffic across each port and will happily do so all day. However, that's about all it will do. Once you start wanting to mess with VLANs (a separate article, Coming Soon TM), need to manually set port speed or want link aggregation and other more advanced features, management becomes a necessity.

There are differing levels of manageability to these switches, ranging from the simple web UIs found on the higher end Netgear switches, through the more capable web UIs and accompanying mobile apps from Unifi, to fully programmable command line and REST API interfaces from enterprise/carrier vendors like Cisco, Arista, Mikrotik and Juniper.

For a homelab or self-hosting network, a proper managed switch is a must as many later projects will either require one or need substantial workarounds and hacks to achieve the same functionality.

Layer 2 vs Layer 2.5 vs Layer 3 switches

Switches are generally considered as being layer 2 devices, however many vendors will advertise layer 3 switches with some advertising layer 2.5 switches. So what's this all about? How do you end up with half a layer?

Since the early 2000s, enterprise switches have also been able to handle simple routing and have been seen as a way to increase the capacity of the big, expensive routers that need to handle more advanced configurations. This has led to the rise of the term "Layer 3 Switch" or "Multi-layer Switch" and is now a basic requirement for serious switches. These won't replace your router and firewall at the edge of the network, but can massively increase the capacity of a network to handle more complex topologies and enable a more flexible network.

Enterprise and more "serious" switches are able to handle both static and dynamic routing (Don't worry, we'll touch on that in the Layer 3 section) the same as big, core routers but are limited in the number of routes they can handle. These are typically the minimum features required to be considered an L3 switch. However, some vendors don't implement all or some of these features and will ship their stripped down products as "Layer 2.5" switches. While this has nothing to do with protocols that sit awkwardly between switching and routing like MPLS, it typically represents those not-quite-basic-L2 but not-quite-full-L3 switches from the likes of Ubiquiti, Netgear and other small office, home office (SOHO) vendors.

Personally, I treat any switch not capable of handling multiple dynamic routing protocols as a simple L2 only switch as wrangling static or connected-only routes ends up being more trouble than it is worth. While this doesn't make them bad devices, I certainly won't pay more for L2.5 over pure L2 and would rather just get something fully featured.

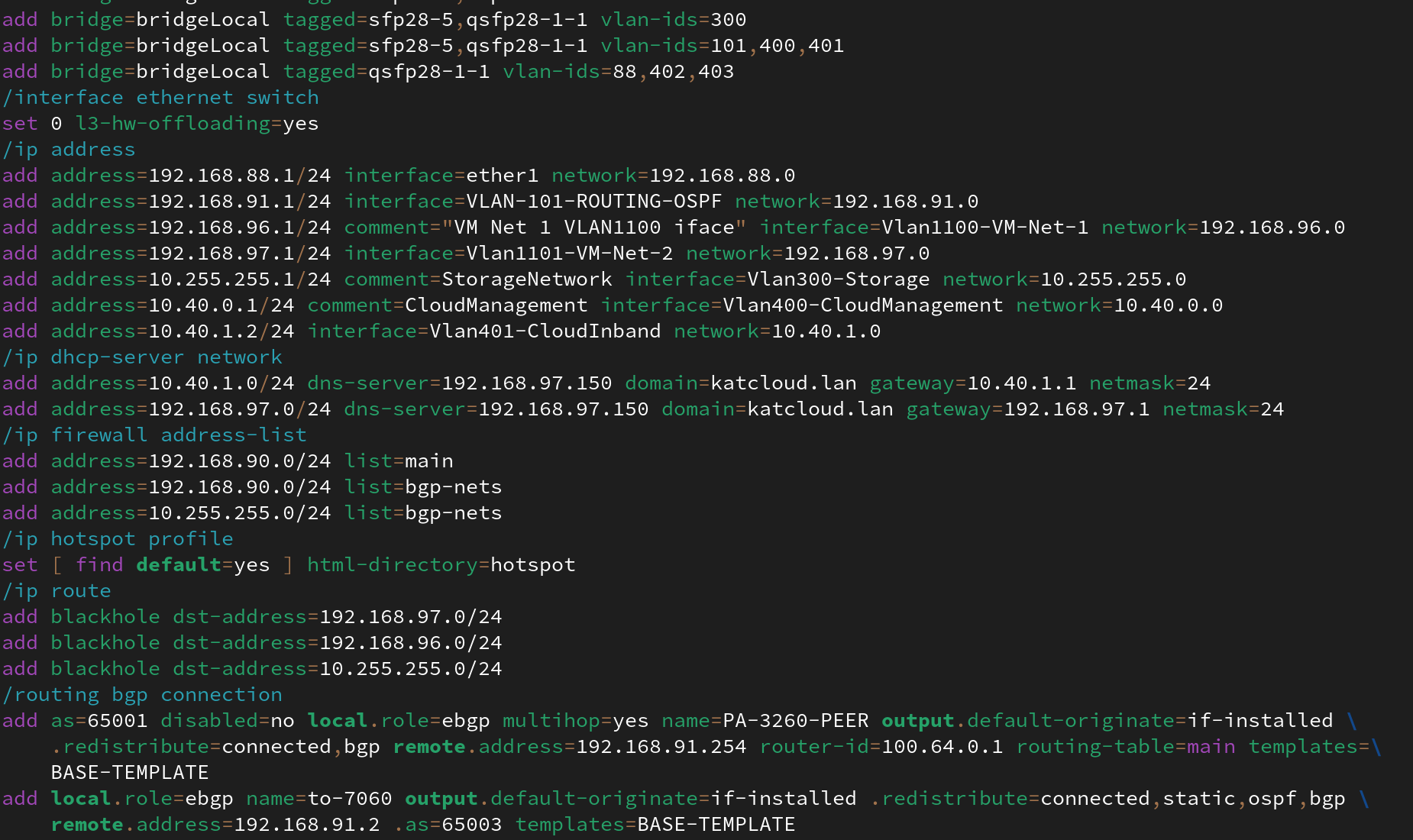

Is it a switch or a router? It can do both! (Screenshot from my Mikrotik CRS-518 config)

As shown above, there's bits of that config referencing VLAN configurations (firmly Layer 2 concepts) and IP addressing for some VLAN interfaces which aren't entirely out of the question for a more basic switch, however later in the config, that switch is also running eBGP (External Border Gateway Protocol) which is a foundational component of routing traffic across the broader internet. So how is this possible?

It all comes down to the ASIC and Network Operating System mentioned earlier. When configured properly, this switch is able to move traffic at L2 or L3 at line-rate without issues due to the features supported by the ASIC and those features exposed through the OS.

So what sort of switch do I need?

I'm going to take a hard and likely unpopular line here: A fully managed enterprise/carrier grade L3 switch. They're marginally more expensive than the managed L2 only or partial L3 switches and are more complex to configure at times, however they are not going to kneecap you the moment you try and get fancy.

My recommendations for vendors and product lines are the Mikrotik CRS3xx or CRS5xx series switches (Ignore the CSS lines and CRS1xx switches) as well as Arista for more datacenter/big iron switches. Juniper can be another solid option, and while I don't have any personal experience with them, a few friends are very pleased with their Junipers.

Mikrotik has quite a learning curve and, despite the price tag, is generally targeting ISPs, campus wireless deployments and other professional settings where a high degree of control is required. This control does come with a higher level of complexity and a loss of some layers of abstraction that many other vendors give. Feature support and exact hardware capabilities vary greatly between products, from small pocket routers and switches like the hEX S, which appear to be little different from your basic, unmanaged switch, up through hardware-offloaded VXLAN software-defined networking at 100Gb+ on the bigger CRS5xx or CCR22xx switches and routers. Understanding the product's capabilities will be key, and the topic of a future article. They tend to come in at a highly competitive price tag, particularly for the capabilities.

Some fan-favorites are devices like the RB5009UPr, a 1x10GbE SFP+, 1x2.5GbE, 7x1GbE router (with full switching ASIC too) and Power over Ethernet in or out on each copper port, as well as the CRS309, an 8x10GbE SFP+ fully passively cooled switch capable of line-rate switching and routing at a handful of watts. I've also become quite a fan of the CRS326 1GbE switches for much the same reason: 24x1GbE, 2x10GbE SFP+ and full L3 in a box that's passively cooled and, like the CRS309, can be powered over PoE and consumes just a handful of watts.

One of the major caveats beyond the learning curve will be the control plane. Part of the trade-off you make for the lower power consumption is a fairly weak control plane. This generally means that anything that isn't hardware offloaded will be much slower and more limited in capacity than on a bigger switch. The routers are typically much better about this, but it is something to keep in mind if you intend to get particularly fancy.

Arista Networks was founded by some Cisco Nexus people asking the question "What if we did Cisco Nexus but good?" and the answer was Arista EOS and the DCS switches. These will be very familiar to people who have used Cisco gear before, however they actually adhere to standards, don't have predatory licensing and are less restrictive with feature-sets, optics and similar. These are getting into your big-iron, high speed switches and can handle a massive amount of traffic. You do pay for this in power consumption and noise, but if you don't need to be right next to the thing? Worth it. One of the very nice parts of EOS is that it is just Linux under the hood. Need some feature the switch doesn't natively support? Throw up a Docker container or VM. Need to debug something not readily exposed through the Cisco-like CLI? Drop in to a Bash shell and troubleshoot just like your desktop or servers. They're also widely supported for hardware offloads for projects like OpenStack and Kubernetes where the ASICs can be leveraged to massively decrease processor overhead on your nodes for network traffic and tightly integrate your workload orchestration with the network. These switches form the core of the heavy-duty packet slinging in my network and many others, often best suited to high speed, low latency routing and switching with high port densities, highly capable offloads and screaming fans to match. I wouldn't put one on my desk, but they'll absolutely serve you well in a rack.

I would avoid Cisco if you can get away with it. They have a nasty habit of implementing not-quite-the-real-standard for some pretty core features like VLAN tagging and Link Aggregation, and have a number of Cisco-specific protocols that make interoperability trickier than it needs to be. I've run some Cisco Catalyst switches in the past and they're perfectly fine. As long as your whole network is Cisco. Friends don't let friends buy Cisco in 2025. The nicer Nexus gear that competes with Arista also has a whole clusterfuck around licensing for ports, features and what features those ports have (depending on which exact line of Nexus switch, this changes!) and is generally going to be more expensive, more power hungry and more frustrating to use than Arista. Cisco Meraki would be a great option if it didn't brick itself the moment it loses licensing in a detestable display of e-waste generation and vulture capitalism. The Catalyst switches are fine. Ish. The less said about how they handle trunks, link aggregation and neighbor discovery the better. In the same way it was "Nobody got fired for buying IBM" in the 70s or 80s, it was "Nobody got fired for buying Cisco" in the 90s and 2000s. Just save yourself the headache and go Arista.

"Okay but those are complicated and fancy, surely I don't need all that!" I hear some asking. Honestly? Maybe not. But do you want to have to replace an otherwise perfectly good piece of hardware when you need something unsupported? Probably not. This leads to the question of "Why/why not Unifi?". Unifi inhabits an awkward position of commanding a fairly premium price tag for a lot of creature comforts, but very few competitive advantages in useful features.

Sure, having RGB port lights is cool, I suppose. Or a nice, slick looking web UI and mobile app and "AR experience". Hey, Ubiquiti? Do you support OSPF yet? It's 2025. How about BGP? Oh... No? So no VXLAN or EVPN either I guess. Oh and you want $400 for that switch. Yeah no. Hard pass. Unifi looks pretty, and their wireless gear is actually pretty solid but their switching and routing is regularly beaten by Cisco gear from the late 90s in feature set. It looks good in a rack and is fine for fairly simple networks and absolutely has a place. I'll let you know if I figure out what it is.

Final thoughts on switching

Given that these devices are going to be the core of your network, they do merit some decent thought, however I wouldn't be too concerned about trying to find the "perfect" switch that handles every edge case you have. The perfect switch doesn't really exist. If you need a few 2.5Gb ports, a smattering of 1Gb ports, a couple 10Gb ports and PoE for a few more devices, I would strongly recommend looking at multiple switches. One switch can probably handle a combination of those needs, but rarely all at once. If it can, it likely comes at a substantial premium.

Some good resources to look at for reviews and more details would be Serve The Home which covers enterprise and homelab equipment ranging from cheap no-name switches up through massive, bleeding edge hardware and a lot in between. Their Mikrotik reviews are quite solid and their deep dives into the underlying technology from the big network vendors can be a good reference for other equipment. As mentioned in the hardware guide, the Project TinyMiniMicro series is another good resource. The Network Berg on YouTube does a lot of good content around Mikrotik and a few other vendors, along with some conceptual walk-throughs. A fair warning, some videos end up being fairly outdated given the rapid pace of Mikrotik development the last few years, but can still be a good place to get a feel for the hardware and software.

Layers 3 and 4: Network and Transport Layers

Layers 3 and 4 are where things start getting recognizable again with this being the realm of routers and firewalls. We're mainly going to focus on firewalls, as they can pull double-duty as routers in many cases as a necessity of their function for filtering traffic. Similar to the switches before, there are a few big categories for routers and firewalls, mainly determined by capability and associated hardware. Likewise, routers equipped with ASICs are going to be able to handle far more traffic than purely CPU bound "soft" routers, but do give up some flexibility for those speeds. Conversely, software-only routers can be run as a bare-metal operating system, a virtual machine or even container, but do suffer from much higher resource requirements and lower max throughput than their ASIC equipped counterparts.

The primary purpose of a router will be to join together multiple networks, whether this is a single local network and your WAN connection from the ISP, or a few dozen internal networks locally and VPN tunnels to cloud providers or your friends and their networks. (I'm not sorry for what I do to a friend's route tables) Now, one can be forgiven for asking why we'd need a separate router when we have L3 switches that seem to do the same thing. In the case of internal networking, this can be an entirely legitimate question. Where full-on routers come into play is when you need to deal with the broader Internet. For the home gamer, this usually is due to NAT. Given the comparatively low-power control planes of switches, the bigger CPUs and higher memory capacity of a router are essential for dealing with NAT. The specifics of this will be a whole separate series, but it is one of the key differentiating factors between switches and routers. The requirement for NAT on IPv4 networks, which most of us have, is really a firewalling thing but can be handled by a router. Once again, blame Cisco.
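At its core, "joining networks" means looking up where each destination address should go and preferring the most specific match. Here's a minimal sketch of that lookup using Python's standard `ipaddress` module. The route table, interface names and the `lookup` helper are all hypothetical, and a real router does this in hardware or in a far more efficient data structure.

```python
import ipaddress

# Hypothetical route table: prefix -> where to send matching traffic.
ROUTES = {
    "0.0.0.0/0": "wan (ISP)",
    "192.168.0.0/24": "lan",
    "192.168.1.0/24": "servers",
    "10.8.0.0/16": "vpn tunnel",
}


def lookup(destination, routes=ROUTES):
    """Return the next hop for the most specific route containing `destination`."""
    address = ipaddress.ip_address(destination)
    matches = [
        (network, next_hop)
        for network, next_hop in (
            (ipaddress.ip_network(prefix), hop) for prefix, hop in routes.items()
        )
        if address in network
    ]
    if not matches:
        return None
    # Longest prefix wins: a /24 beats the /0 default route.
    return max(matches, key=lambda match: match[0].prefixlen)[1]


if __name__ == "__main__":
    for destination in ("192.168.1.20", "10.8.3.4", "1.1.1.1"):
        print(destination, "->", lookup(destination))
```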

Firewalls are generally accomplishing the same thing: Connecting multiple networks. However, they are also tailored to be a security boundary between those networks. A firewall is almost always a router, but not all routers can be firewalls.

We're mainly going to touch on hardware routers first, as virtualizing a router is a bit different of a problem and merits some more specifics.

Router performance specs

In a recurring theme with the switches, picking a router that's capable of the throughput you need and has the interfaces needed to integrate with the rest of your network will be the key set of requirements. Most hardware routers from the usual suspects of Mikrotik, Arista, Cisco, etc. will publish the specs for what their routers can handle for different protocols and types of traffic. The key things to look for will be the total throughput (in gigabits/second or terabits/second) and packet processing throughput (usually in thousands or millions of packets per second, kpps or Mpps). These two specs are usually related to a point, but are not interchangeable. Some types of traffic like DNS, remote logging or general web browsing may throw around a high volume of packets, but each one can be quite small compared to lower volume, larger packets like iSCSI or NFS storage, video streaming and the like. Estimating the requirements for total packet/second throughput can be quite difficult without good instrumentation, so more headroom is always welcome.
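To see why the two specs diverge, it helps to work out how many frames fit on a link at a given size. The sketch below assumes standard Ethernet framing, where each frame also costs 20 bytes on the wire for the preamble, start-of-frame delimiter and inter-frame gap. `max_packets_per_second` is a hypothetical helper name.

```python
# Per-frame overhead on the wire that is not part of the frame itself:
# 7 bytes preamble + 1 byte start-of-frame delimiter + 12 bytes inter-frame gap.
WIRE_OVERHEAD_BYTES = 20


def max_packets_per_second(link_gbps, frame_bytes):
    """Upper bound on Ethernet frames per second at a given frame size."""
    bits_per_frame = (frame_bytes + WIRE_OVERHEAD_BYTES) * 8
    return link_gbps * 1_000_000_000 / bits_per_frame


if __name__ == "__main__":
    for size in (64, 1518):
        pps = max_packets_per_second(1, size)
        print(f"1Gb link, {size} byte frames: {pps / 1_000_000:.3f} Mpps")
```

The same gigabit link works out to roughly 1.49 Mpps of minimum-size frames but only about 0.08 Mpps of full-size ones, which is why a router can hit its rated gigabits with big packets and still fall over on small ones.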

VPN Capabilities and Some Minor Security Concerns

Another important stat to check for will be VPN throughput if hosting a VPN server for remote access is desirable. A couple key things to watch out for will be what protocols are supported (Wireguard is generally the darling child of many) and whether the VPN is proprietary or not. SSLVPNs like GlobalProtect from Palo Alto and Fortigate VPN should be avoided both for reasons of licensing and some minor security issues.

Given that these protocols will not have as much hardware acceleration available and much higher overhead than slinging packets around, it is not unexpected to see dramatically lower throughput for VPNs than normal packet processing. Depending on how you design your network, this may end up being a non-issue or a deal-breaker. Personally I see decent VPN throughput as a "nice to have".

Stateful versus Stateless filtering

A major point of contrast between routers and firewalls is how they're able to filter traffic: stateful versus stateless filtering. A stateless filter, like a router ACL, only looks at traffic moving in a single direction. A basic ACL may look like "Allow 443/tcp from 192.168.0.0 to 192.168.1.0", however it will not allow the return traffic, or traffic related to that initial flow. These router ACLs are incredibly coarse filters, but can be pushed down to the ASIC, making them very, very fast. A stateful firewall rule may look something like "Allow new and established sessions on 443/tcp from 192.168.0.0 to 192.168.1.0". This will allow the return traffic from the other subnet and allow related sessions to be established. This is especially important for writing rules that permit new connections in only one direction while still allowing return traffic, and for traversing NAT. Given that NAT relies entirely on manipulating traffic state, a stateful firewall is necessary to allow interactions with NAT, making basic router ACLs ineffective at the edge of a network where NAT is involved.
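The difference can be sketched in a few lines of Python. This is a toy model rather than how any real firewall is implemented: the `Packet` type, the /24 masks and the host addresses are all assumptions for illustration, and real connection tracking also follows TCP state, timeouts and related flows.

```python
import ipaddress
from typing import NamedTuple


class Packet(NamedTuple):
    src: str
    dst: str
    sport: int
    dport: int


# Networks from the example rule in the text; the /24 masks are assumed.
LAN_A = ipaddress.ip_network("192.168.0.0/24")
LAN_B = ipaddress.ip_network("192.168.1.0/24")


def rule_matches(pkt: Packet) -> bool:
    """'Allow 443/tcp from 192.168.0.0 to 192.168.1.0' as a one-way match."""
    return (
        ipaddress.ip_address(pkt.src) in LAN_A
        and ipaddress.ip_address(pkt.dst) in LAN_B
        and pkt.dport == 443
    )


def stateless_allow(pkt: Packet) -> bool:
    # Every packet is judged on its own; nothing is remembered.
    return rule_matches(pkt)


class StatefulFirewall:
    def __init__(self) -> None:
        # Flows already allowed, stored as seen in the forward direction.
        self.established = set()

    def allow(self, pkt: Packet) -> bool:
        # A reply is the same flow with source and destination swapped.
        reverse = Packet(pkt.dst, pkt.src, pkt.dport, pkt.sport)
        if pkt in self.established or reverse in self.established:
            return True
        if rule_matches(pkt):
            self.established.add(pkt)
            return True
        return False


if __name__ == "__main__":
    request = Packet("192.168.0.10", "192.168.1.20", 51000, 443)
    reply = Packet("192.168.1.20", "192.168.0.10", 443, 51000)
    fw = StatefulFirewall()
    print("stateless:", stateless_allow(request), stateless_allow(reply))
    print("stateful: ", fw.allow(request), fw.allow(reply))
```

The stateless filter passes the request and drops the reply, because the reply does not match the one-way rule. The stateful version remembers the flow it allowed and lets the reply back through without needing a second rule.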

Linux, BSD and Proprietary Firewalls

As mentioned before, the throughput of the firewall will be a critical factor in which specific model is desirable for a use case, however there are some additional factors at play. One of the biggest will be the base operating system and firewall used. These will all be stateful firewalls working at Layers 3 and 4, at a minimum, making the differences primarily ones of performance and management. Generally these fall into three main categories:

- Linux based with nftables/an eBPF flavor of nftables

- BSD based with the pf packet filter firewall

- Something bespoke to that vendor

These days, I would strongly recommend options 1 or 3. The Linux based firewalls have much better performance, can be more expressive and are generally easier to manage than their BSD counterparts. The bespoke options typically lean into more advanced features like Layer 7 filtering, deep packet inspection and in-line malware scanning, though these command a substantial price tag from Next Generation Firewall vendors like Palo Alto Networks, Juniper and Fortinet. Those will be a separate topic entirely as they function distinctly from L4 firewalls.

A core reason for steering away from the BSD options is that most pf implementations are interface-based as opposed to zone or network based. This means you're typically writing rules around what traffic can enter a network interface, and what traffic can leave it. This makes handling traffic where multiple networks may be behind a single interface (or multiple destinations may be reached) incredibly cumbersome and hard to troubleshoot. Firewalls like pfSense and OPNsense function in this way and are unnecessarily troublesome to manage because of it. Additionally, there are some hard limits on performance with pf, as a few single-thread bound components tie throughput to CPU speed. These single-threaded spin-locks occur in managing the state of traffic when moving between interfaces and networks, meaning that the limit applies not to a single interface, but to the firewall as a whole. Because single-thread performance scales poorly, this leads to a fairly hard cap on throughput of around 8-10Gb/s across the entire firewall. For simpler, lower-throughput networks this may not be a substantial issue, but it is a hard limit on network performance that should be avoided if possible.

The Linux and nftables based firewalls get around this issue through a much more modern and performant network stack that can allow hundreds of gigabits and likely terabits of traffic through, with the proper hardware. Likewise, the ability to work with firewall zones as opposed to specific interfaces allows for a much greater degree of flexibility and expressiveness. Decoupling the physical interface from the traffic being processed makes handling more complex networks easier and allows rules to be more portable. An important note: Some firewalls will present their firewall rules in the older iptables style, however this has frequently been replaced under the hood by nftables or similar, with the iptables syntax kept for interoperability and intelligibility with other firewall rules.
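As a rough illustration of what zone-based rules buy you, here is a minimal Python sketch. It is not the configuration syntax of any particular firewall; the interface names, zone names and allowed ports are all hypothetical.

```python
# Hypothetical interface and zone names, purely for illustration.
ZONES = {
    "lan": {"eth1", "eth2.10", "eth2.20"},
    "dmz": {"eth3"},
    "wan": {"eth0"},
}

# Policy is written once per (source zone, destination zone) pair,
# listing the destination ports that may pass.
ZONE_POLICY = {
    ("lan", "wan"): {53, 80, 443},
    ("lan", "dmz"): {443},
    ("wan", "dmz"): {443},
}


def zone_of(interface: str) -> str:
    """Look up which zone an interface has been assigned to."""
    for zone, members in ZONES.items():
        if interface in members:
            return zone
    raise KeyError(f"{interface} is not assigned to a zone")


def allowed(in_iface: str, out_iface: str, dport: int) -> bool:
    """Decide on the zone pair, not on the specific interfaces."""
    pair = (zone_of(in_iface), zone_of(out_iface))
    return dport in ZONE_POLICY.get(pair, set())


if __name__ == "__main__":
    print(allowed("eth2.10", "eth0", 443))  # lan -> wan
    print(allowed("eth0", "eth1", 443))     # wan -> lan, no policy defined
    # A new VLAN only needs a zone assignment, not a new set of rules.
    ZONES["lan"].add("eth2.30")
    print(allowed("eth2.30", "eth3", 443))  # inherits the lan -> dmz policy
```

The point of the design is in the last step: a new interface picks up the existing policy as soon as it joins a zone, where an interface-based ruleset would need its rules written out again for that interface.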

I would strongly recommend jumping right to a Linux based firewall and saving the headache of an outdated network stack, even if you do get a nice web UI for it.

Software Recommendations

The two main options we'll explore will be vendor-built options, primarily from Mikrotik, as well as operating systems you can throw on most any computer. Virtualized firewalls are being deliberately omitted due to some specific performance, management and deployment considerations but will be a topic of follow-up material.

Doing it "The Hard Way" - Bare Linux:

This will be a learning adventure to say the least, and will require building up a firewall from a bare Linux install, configuring a DHCP client and server, setting up interfaces and firewall rules along with any other additional functions that are desired. This will be the most flexible approach, however it does present the greatest challenge. I would only recommend this for the very experienced or a tinkerer that wants to really know what's going on under the hood. Personally, I've had decent success with running a standard distribution like Red Hat Enterprise Linux or derivatives, but any standard server distro like Debian, Ubuntu, RHEL or others should be fine.

Some Assembly Required - VyOS:

VyOS is an open-source router and firewall distribution based on Debian that comes with some of the initial setup completed already. By default, this will be command-line only, though some community projects do maintain a web UI if that is desired. This will give much of the same control as a bare Linux box, but takes some of the hassle and headache out of the equation. Static and dynamic routing, zone-based firewalls, DHCP and a variety of protocols for routing, VPNs and most of what someone would need are supported out of the box. This can be a solid option for someone looking to get the most out of their firewall hardware, but who doesn't want the "project car"-like experience of a bare Linux firewall.

Batteries Included - Arista NGFW (Formerly Untangle):

Arista acquired the Untangle project several years ago and has re-branded it as "Arista NG Firewall" under the "Edge Threat Management" line. This has given some much-needed financial backing to the Untangle product, however it does come at a literal cost. The free version does include some basic firewalling features, a few VPN protocols and some more limited policy management, while the paid edition is much more feature-complete. This does still give a fairly high-performance firewall, more advanced features around application controls and can be a good first-step into NGFWs. This also comes with a functional, if not particularly attractive web UI and is far more turn-key than the previous options. Personally I have not used it since it was still Untangle and was not especially impressed (but not particularly disappointed either), though I have heard it has improved substantially in terms of usability, features and performance.

Hardware Options

Once you've picked out your OS of choice, you'll naturally need hardware to run it on. The basic requirements will be very much in line with the hardware recommendations in Part II, with one key difference: You'll need at least two NICs. While there are workarounds to use a single NIC (called a Router on a Stick), that significantly limits your throughput and increases the complexity of the configuration. To start out, we're going to avoid that entire mess and just go with two or more NICs. One will be WAN-facing, the other LAN-facing, and expanding out will be a topic for later.

The actual hardware required will be largely the same as what would make a good home server, but you can be far more minimal on memory and storage. Unless you're doing a ton of logging, running a lot of other services and really loading down that server, a modern-ish processor with 8GB of RAM and an SSD from the last decade will be good enough to handle a couple gigabit of traffic, even with a lot of firewall rules and networks to wrangle.

A second option will be to go with a vendor-built solution from the usual suspects mentioned earlier. Pre-installed and configured options like the Arista MicroEdge or NG Firewall Appliances save the need to source the proper hardware, while bigger systems like Palo Alto Networks or Juniper SRX firewalls can be picked up second-hand on eBay or similar. These will have some non-trivial limitations, mostly around licensing, and software updates will likely be non-existent, so substantial effort will need to be made to work around those issues and mitigate vulnerable components. Personally I would be very careful with these devices and would recommend leaving them be until more experience is gained. That said, it is incredibly entertaining to confuse your ISP tech by answering "Where's your router?" with "Which one?" or "Oh, the Palo over there".

Many of the larger Mikrotik RB series devices and CCR series devices make excellent routers and firewalls for networks ranging from a few hundred megabit to tens or hundreds of gigabits. In particular the CCR2004s and higher make excellent options for handling larger, more complex networks while alleviating the compute and memory limitations of the CRS series switches. A device like the RB5009UPr mentioned earlier will also handle a substantial amount of network traffic through a fairly simple firewall, assuming some performance tuning is done and the rule-base is kept fairly small. That said, what most home users will do sits entirely within those limits.

NICs for your firewall

Short answer: Intel.

One of the crucial factors in how well a network appliance or server will pass traffic in the absence of an ASIC, like one would find in a switch or router, is the quality and capabilities of the NICs. This typically takes the form of hardware offloads for common packet processing tasks. Simpler NICs from Realtek, Aquantia and the like will have few, if any, offloads and force more tasks onto the CPU. This will then increase the amount of time it takes to actually handle any single packet, increase processor utilization and generally make for a far slower, less efficient system. Higher quality NICs from Intel, Nvidia (Yes, the GPU people), AMD or Chelsio will have fixed function silicon similar to a switch ASIC on the NIC, massively increasing the efficiency of handling this traffic. It is not uncommon to see the same processor only able to handle a few hundred megabit from a Realtek NIC, then able to handle tens of gigabits using high end Nvidia ConnectX or Intel XXV or E-series NICs.
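A quick way to see why offloads matter is to work out how many CPU cycles a single core gets per packet. The Python sketch below is only arithmetic under stated assumptions: a 3 GHz core, one core doing all the work, and packet rates derived from standard Ethernet framing (about 81 thousand packets per second for 1 Gb/s of 1518 byte frames, about 14.88 million for 10 Gb/s of 64 byte frames). The function name is illustrative.

```python
def cycles_per_packet(clock_hz: float, packets_per_second: float) -> float:
    """CPU cycles one core can spend on each packet at a given packet rate."""
    return clock_hz / packets_per_second


if __name__ == "__main__":
    clock_hz = 3.0e9  # assumed 3 GHz core, handling every packet alone
    scenarios = (
        ("1 Gb/s of 1518 byte frames", 81_274),
        ("10 Gb/s of 64 byte frames", 14_880_952),
    )
    for label, pps in scenarios:
        budget = cycles_per_packet(clock_hz, pps)
        print(f"{label}: about {budget:,.0f} cycles per packet")
```

At the small-packet end the budget shrinks to a couple of hundred cycles per packet, so any work the NIC can take off the CPU translates directly into throughput.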

Generally, Nvidia's ConnectX line of NICs is among the best in the industry, however these NICs rely heavily on a proprietary driver stack that seriously limits OS compatibility and can be quite "fragile" around kernel updates. They also frequently go End-of-Life, at which point only old, unmaintained driver packages are available, preventing the use of the NICs on newer systems. This effectively forces the end buyer to replace these NICs every few years with a newer model and to have well-tested upgrade paths.

Intel NICs, on the other hand, tend to be supported much, much longer term. Options like the i350 or X520 (1GbE and 10GbE respectively) have been around for a decade or more and are likely going to still be getting driver updates when the only things left are cockroaches, COBOL and Twinkies. While not quite as heavy-duty as their Nvidia counterparts, these NICs are still highly capable and broadly supported on most any operating system. The combination of good offloads, long production runs and near-immortal drivers makes these a cheap, easy and generally solid option for servers and network appliances. The XXV and E-series NICs are solid options for >10GbE networking and I've personally had solid experiences with the XXV710s for 25GbE. Mainly that I forget what NICs I have in the systems and they keep passing traffic fast enough which is about as optimal as it gets.

Getting solid, dependable NICs will be key to having a performant, painless hardware experience for a firewall and a little bit of up-front investment pays dividends down the road. In a continuing theme of this article: Upfront investment is cheaper than replacing it down the road.

Wrapping Up

Oh, you're still here? Good job! Whew, that took a hot minute. With any luck, you've got a better idea of some of the nuts and bolts of network hardware and software, what to look for and what to avoid. Future articles will include some more specific deep-dives into a particular concept or vendor, along with some examples of how I and others are using these bits of gear in our labs and self hosting. Networking is a whole rabbit hole unto itself and can be a fun adventure, especially when you're getting into the stranger bits of it. The next steps will be going through actually configuring some of these devices and a look at how to secure them.

Until next time!